Testing: Risk-First Development, part 1/2

Some history: risk-based testing

At “EuroSTAR 1999” in Barcelona, Ståle Amland’s presentation and paper entitled “Risk Based Testing and Metrics” was awarded the prestigious “Best Paper Award”. Three years later, I had the pleasure of delivering the “Risk Based Test Management” tutorial at EuroSTAR 2002. “Risk based” was a very popular notion then, and Hans Schaefer frequently left his windy island near Bergen in Norway and travelled the world with his high-quality presentations on this subject.

However, the roots of considerable confusion were already visible then as well. Risk-based testing is only a part of the more general risk-based development, and of risk management, and cannot sensibly be treated as a totally separate domain.

This confusion was, and still is, strikingly visible in the ISTQB syllabi. In chapter 5.5 of the ISTQB Foundation Level syllabus (version 2011), the subjects of general risk management and risk-based testing are thoroughly mixed up. Instead of going into what risk-based testing (or, more generally, risk-based development) really is, as we do here in “Risk is essential, but sadly neglected”, the syllabus concentrates on the difference between… product and project risk. I wonder what the latter has to do with testing; perhaps it means testing project members for the presence of flu viruses.

Sarcasm aside, in my opinion, the huge potential of the risk-based approach had petered out before it became really widespread. No wonder: the world was then too preoccupied with what officially started on 13 February 2001: the agile movement.

Another history: agile-driven reversal of waterfall sequence

The agile-like approach is far older than most 25-year-old enthusiasts of lean startup and continuous integration realize: it was first defined in Tom Gilb’s “Evolutionary Project Management” (EVO) as early as the 1970s, and has re-appeared to some extent in every iterative methodology since then.

However, two factors gave XP, TDD and agile a real kick forward. They were the Internet-based process re-engineering revolution of the late 1990s and early 2000s, and the spread of mobile technologies and smartphones. The need to create working applications very fast, without really knowing in advance the business process they were supposed to support, resulted in a full-blown, comprehensive agile revolution.

However, the essence of TDD / XP / agile is not “working without requirements”, which should be treated as a necessary evil rather than a goal, but the reversal of the traditional plan → design → code → test sequence into a test-first approach, which is superior in most respects, even if the requirements are well known in advance.

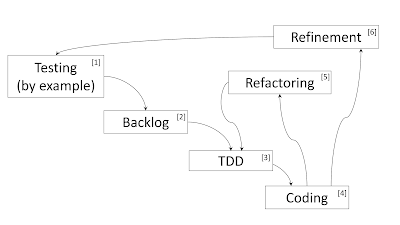

Figure 1. Test-first approach

Tests, or test cases, are an essential part of requirements – in agile terminology, they are called acceptance criteria, or acceptance scenarios, or examples [1]. They come first, helping to make the imprecise user stories in the product backlog precise [2]. If TDD is used as well, unit-level test cases are then created [3], before coding starts [4]. If the architecture is wrong, ad hoc, or non-existent, it can be improved using refactoring [5]. What if the implemented requirements are wrong? Well, they can be changed and then re-defined [6] and re-implemented in the next loop of the agile development process.

This picture is of course an oversimplification, but it clearly shows the benefits, and the superiority, of the “test-first” approach compared to the traditional, sequential model.

Risk is essential, but sadly neglected

Sadly, this great leap in process quality, offered by the test-first, agile approach, was followed by a relative decline in awareness of the real importance of risk-based development and testing.

The most blatant example of this is the total lack of any reference to risk (neither the consequence nor the probability of failures) in agile scrum backlog items and in the agenda for sprint planning meetings. It looks as if agile practitioners and scrum team members are expected somehow to absorb risk knowledge from thin air, without really learning it or taking it into consideration.

In both agile and traditional projects, the notorious difficulty of deciding requirements’ priorities is a convincing symptom of the lack of any prior risk analysis. Traditional methods try to achieve prioritization by coercion, such as imposing MoSCoW, and agile frameworks by repeatedly asking what the product owner really wants when there is not enough time for everything. But this can cure only the symptoms, not the underlying cause, which is the lack of risk analysis at the beginning.

However, an interesting and ambitious attempt to amend this has already appeared: risk-based testing for agile projects, including risk poker, by Erik van Veenendaal. Google it!

Being a freelancer, I am often expected to answer absurd questions, like “how many testers should there be in a scrum team?”, or “how much testing do we need?” My automatic answer is “twenty percent”, which is popular and sometimes even right. However, in reality it depends on the risk. For nuclear plant control software, or some other safety-critical software, the cost of testing (or, more exactly, quality assurance) may well exceed 100% of all other costs. For a mobile game, where the user may sometimes even expect and enjoy finding some bugs, a QA cost of less than 5% may be quite rational.

So, risk-based testing is always there, even if its name is no longer popular; it is behind every decision concerning how thorough, controlled, and detailed the actual development process (including testing) must be.

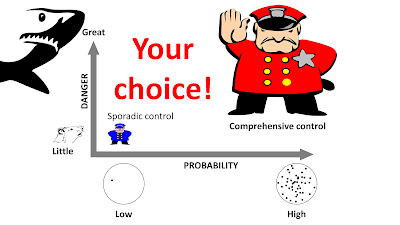

On the figure below, I try to illustrate this simple yet basic idea.

Figure 2 Risk and QA
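The same idea can be put into a few lines of Python. This is only a rough sketch under my own assumptions: the 1 to 5 consequence scale, the function names `risk_exposure` and `qa_share`, and the band boundaries are all hypothetical. The shares merely echo the rough figures quoted above (under 5% for a casual game, the popular 20%, 100% or more for safety-critical software) and are not measured data.

```python
# Hypothetical bands: (upper bound of risk exposure, QA cost as a fraction
# of all other development costs). The boundaries are invented for
# illustration; calibrate them against your own project history.
QA_SHARE_BANDS = [
    (0.5, 0.05),  # e.g. a casual mobile game
    (2.0, 0.20),  # the popular "twenty percent"
    (3.5, 0.50),
    (5.0, 1.00),  # safety-critical: treat this as a floor, not a ceiling
]


def risk_exposure(consequence: int, probability: float) -> float:
    """Combine failure consequence (1..5) and failure probability (0..1)."""
    if not 1 <= consequence <= 5:
        raise ValueError("consequence must be between 1 and 5")
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return consequence * probability


def qa_share(exposure: float) -> float:
    """Return the QA share of the first band whose upper bound covers the exposure."""
    for upper_bound, share in QA_SHARE_BANDS:
        if exposure <= upper_bound:
            return share
    # Exposure above the last bound: fall back to the highest share.
    return QA_SHARE_BANDS[-1][1]


if __name__ == "__main__":
    examples = [
        ("mobile game", 1, 0.3),
        ("web shop", 3, 0.5),
        ("plant control", 5, 0.9),
    ]
    for name, consequence, probability in examples:
        exposure = risk_exposure(consequence, probability)
        print(f"{name}: exposure {exposure:.2f}, QA share {qa_share(exposure):.0%}")
```

The point is not the particular numbers but the direction of the dependency: the QA share is an output of the risk assessment, not a constant chosen before it.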

Testing and beginning with risk: the rationale

How is risk used in real IT projects? Typically, after some haggling about needs and requirements, development – agile and test-driven, or traditional – starts. No one asks which process to use – the process is simply there already. The decision on how much testing, or how thorough a requirements verification, is needed is taken ad hoc, and is not based on risk analysis. I have participated in hundreds of agile scrum sprint planning meetings and – with a few (too few) notable exceptions – the issue of risk has never been discussed. The Definition of Done has typically been the same, regardless of feature criticality. During the second half of the planning meeting, the planning of test tasks was 100% focused on technicalities, not on HOW MUCH TESTING WAS NEEDED. Quality criteria for product backlog items sadly lack a “risk level”.

In sequential projects, or on the highest, sequential level of project management for agile development, the same sad picture appears: methods, processes, the relative amount of requirements analysis or modelling, the change management process (how much time to spend on impact analysis?), how much testing, what test design techniques – all that is decided before any risk analysis has started. This is of course totally wrong – you cannot decide such issues correctly unless you take potential failure consequences, and the mistake → bug → failure probability, into account.

How come so many projects have been quite successful? Well, we are smart, intuitive creatures, and besides, poor quality and embarrassing failures have become a socially accepted norm: IT people no longer feel ashamed of them.

There is little room here to present a full list of arguments and steps, so I’ll leapfrog directly to my final vision of how it should be done in a far better way: risk-first development.

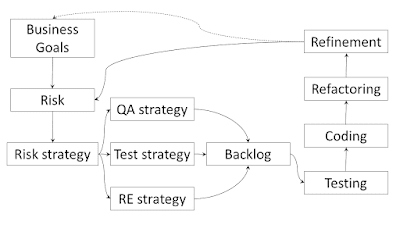

Figure 3. Risk-first development

You start with risks, which are identified and analysed for your business goals. Well, yes, that means your business goals must be clear – hard, isn’t it?

At the beginning, you have a fair chance of correctly identifying risk consequences, but less so risk probabilities, because they depend to a large extent on technical issues, which are not known yet.

Only when you know, more or less, your risks and your business goals can you decide on a risk strategy. Should it be aggressive, maximizing possible profit at the cost of accepting a higher failure probability, or should it rather be cautious, minimizing failure probability but renouncing impressive potential profits?

The risk strategy is the basis for deciding the QA strategy – how carefully and how thoroughly do you choose to work, and how much do you document, analyse, check and double-check? Knowing this, you can distribute the QA effort among requirements engineering (“RE strategy” in the figure above), testing (“Test strategy”), configuration and change management, etc.
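The step from risk strategy to QA strategy to effort distribution might be sketched as follows. Everything here is an assumption made for illustration: the two strategy names come from the paragraph above, but the budget shares, the split weights and the function `plan_qa_effort` are hypothetical placeholders that a real project would replace with its own figures.

```python
from enum import Enum


class RiskStrategy(Enum):
    AGGRESSIVE = "aggressive"  # accept higher failure probability for profit
    CAUTIOUS = "cautious"      # minimize failure probability


# Hypothetical share of the total effort reserved for QA under each strategy.
QA_BUDGET_SHARE = {
    RiskStrategy.AGGRESSIVE: 0.10,
    RiskStrategy.CAUTIOUS: 0.40,
}

# Hypothetical split of the QA budget among the QA areas named in the text.
EFFORT_SPLIT = {
    "requirements engineering": 0.3,
    "testing": 0.5,
    "configuration and change management": 0.2,
}


def plan_qa_effort(total_person_days: float, strategy: RiskStrategy) -> dict[str, float]:
    """Derive the QA budget from the risk strategy, then split it by area."""
    qa_days = total_person_days * QA_BUDGET_SHARE[strategy]
    return {area: round(qa_days * weight, 1) for area, weight in EFFORT_SPLIT.items()}


if __name__ == "__main__":
    for strategy in RiskStrategy:
        print(strategy.value, plan_qa_effort(200, strategy))
```

In practice the split itself would also depend on where the risks sit: a project whose main risk is misunderstood requirements would shift weight from testing towards requirements engineering.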

Then, and only then, does it make sense to start building the product backlog (or requirements specification, or whatever you call this in your process). Based on the already defined business risks, you’re better equipped to define risk levels for individual backlog items / requirements.

No, this approach does not mean you need to spend months before being allowed to start actual work. You decide yourself how much time to spend on the six steps that precede backlog building. Depending on the risk (sure enough), you may choose any time box, from one day to half a year, but, by all means, do it. Using a pre-defined process that disregards actual project needs and specific product-related risks is wrong. Starting a project without knowing your business goals is the most common reason for project failures (“The Standish Group Chaos Report”).
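What a “risk level” on a backlog item could look like is sketched below. This is a minimal illustration, not an established scrum artefact: the 1 to 3 scales, the thresholds, the sample items and the checklists in `DEFINITION_OF_DONE` are all hypothetical. It shows a Definition of Done that grows with the risk level instead of being the same for every item.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class BacklogItem:
    title: str
    consequence: int  # 1 (minor) .. 3 (severe), hypothetical scale
    probability: int  # 1 (unlikely) .. 3 (likely), hypothetical scale

    @property
    def risk_level(self) -> RiskLevel:
        score = self.consequence * self.probability  # ranges from 1 to 9
        if score >= 6:
            return RiskLevel.HIGH
        if score >= 3:
            return RiskLevel.MEDIUM
        return RiskLevel.LOW


# Hypothetical checklists: each level adds to the one below it.
_LOW_DONE = ["acceptance criteria pass"]
_MEDIUM_DONE = _LOW_DONE + ["unit tests written first", "peer review done"]
_HIGH_DONE = _MEDIUM_DONE + ["exploratory test session held", "full regression run"]

DEFINITION_OF_DONE = {
    RiskLevel.LOW: _LOW_DONE,
    RiskLevel.MEDIUM: _MEDIUM_DONE,
    RiskLevel.HIGH: _HIGH_DONE,
}


def definition_of_done(item: BacklogItem) -> list[str]:
    """Return the checklist that applies to this item's risk level."""
    return DEFINITION_OF_DONE[item.risk_level]


if __name__ == "__main__":
    backlog = [
        BacklogItem("Change avatar", consequence=1, probability=2),
        BacklogItem("Payment processing", consequence=3, probability=3),
        BacklogItem("Search filter", consequence=2, probability=2),
    ]
    # Discuss the riskiest items first in sprint planning.
    for item in sorted(backlog, key=lambda i: i.risk_level, reverse=True):
        print(item.title, item.risk_level.name, definition_of_done(item))
```

With something like this in place, the question of how much testing a given item needs has at least a starting answer before the technical task breakdown begins.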